Live video streaming stack from Home

Our subject

This post describes my research into how to live stream video to a public audience, using a custom stack hosted entirely at home.

There are tons of technologies for that. I won't detail everything, only what I did given my own constraints and needs. The choices I made are tied to my usage, but I share them with you here.

With just two pieces of software and a few clicks or lines of code, anyone can live stream anything. But that usually relies on two things I don't want : using a public streaming relay provider, like Twitch or Youtube (or others), and using a 15-year-old video compression technology that has since been superseded by much better ones : H264.

So : live streaming video/audio content, without a public platform, and without H264. Are you with me ?

Spoiler : That's not as easy as it seems ;-)

Introduction

Today, live streaming is a mature technology. By live streaming, I mean "sending video and audio content in real time to some souls who receive it and play it back on their devices". Anyone can use one of the public platforms. I use Twitch myself. Youtube, Facebook and Periscope are others that I don't use.

But relying on a third-party service usually means you don't control the stack, and you have to comply with the streaming service guidelines. You can't stream any content you want, and the streaming platform is free to manipulate your content, often by inserting ads into it, or even to monetize it... I don't like that. You may know that I like to be free, as the Internet is a free world where anyone can exchange content of any kind.

Also, if the streaming platform is down, your stream is down. That is logical, but it is worth pointing out that every dependency you add is one you rely on later. I always try to prevent that, or to limit the dependencies to vital ones : energy (I don't produce my own energy), network provider (I don't own my Internet provider), technologies (I did not create my own video format). That should be all.

Yes I know, I am going against the current trend. But I also like to build and understand things through practice.

What is live streaming ?

In its simplest form, live streaming is one publisher pushing live content to one receiver, using unicast.

By live content, we mean one video and/or one audio stream, muxed into a container.

So, you want to read my stream ? Give me your IP address, make sure the path between you and me is open (your firewall or NAT system), and the content will be delivered to you. That's it.

Assuming the consumer stack can read the content (understand the format), and the network between the publisher and the consumer is unfiltered and fast enough, it will just work. But that scenario is not very useful or practical, right ?

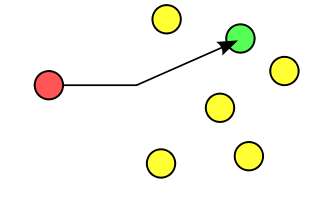

That's why we use intermediaries (middleware), to end up with a schema like this one :

In this schema, consumers ask for content. The server then forwards the stream that the publisher is constantly feeding it to the consumers who asked for it.

The RTMP stack

Today, streaming platforms rely on a bunch of established, standardized, stable - and sometimes pretty old - technologies. Mainly the RTMP protocol, using the FLV container to encapsulate H264/AAC content.

This represents the big picture of today's live streaming over the Internet (2019).

One publisher (a streamer) sends streaming content over the Internet to the middleware platform, which manages and hosts that content so that many clients, the consumers, can get it delivered. Something like this :

As you can see, the RTMP server is the key here : it receives a published stream and lets clients fetch it by connecting to it. In fact, the server relays the stream, duplicating it and sending one copy to each client.

Keep in mind the direction of the streams : clients ask a server for some content, and the server answers with that content, which is itself being delivered by one source, all in real time, or nearly.

So the RTMP server acts as a barrier : the bandwidth between the publisher and the server only has to carry the publisher's single stream, whereas the bandwidth between the server and the clients has to carry the stream bitrate multiplied by the number of clients. Aha! One starts to understand that the infrastructure of those streaming platforms is huge, and that this is today's Internet problem : making asymmetric a system that was designed to be roughly symmetric, or balanced.
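To make the fan-out concrete, here is a minimal sketch of what a relay does, written as a toy in-memory model. It is not how any real RTMP server is implemented; the `Relay` class and the `egress_mbps` helper are hypothetical names used only for illustration.

```python
from collections import deque


class Relay:
    """Toy in-memory relay: one publisher feed copied to every subscriber."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self):
        # Each client gets its own queue, filled from the moment it joins.
        queue = deque()
        self.subscribers.append(queue)
        return queue

    def publish(self, chunk):
        # One chunk in, one copy out per connected client.
        for queue in self.subscribers:
            queue.append(chunk)
        # Number of bytes the relay had to send downstream for this chunk.
        return len(chunk) * len(self.subscribers)


def egress_mbps(stream_mbps, clients):
    """Server-to-clients bandwidth needed to feed every client."""
    return stream_mbps * clients


relay = Relay()
alice = relay.subscribe()
bob = relay.subscribe()
sent = relay.publish(b"abcd")
print(sent, "bytes sent for a 4-byte chunk")
print(egress_mbps(6, 50), "Mbps needed for 50 clients at 6 Mbps")
```

The publisher side stays constant whatever the audience size; only the relay's outgoing side grows with the number of clients.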

Also, RTMP allows the provider to filter who accesses the stream content. Read the protocol : it embeds concepts such as authentication, which allow the provider to monetize access to the stream, or to restrict it.

The RTSP/RTP/RTCP stack

A few words about some other technologies. RTSP/RTP/RTCP : those 3 protocols are usually used in IPTV delivery and require control of the network stack from end to end (using PIM and IGMP on intermediate routers to multicast the stream). That is not really what we want here, as it involves designing a complex end-to-end infrastructure to multicast the stream. It is mainly what ISPs use to push TV streams to you.

Let's stay at our scale : unicast.

The HLS and MPEG-DASH technologies

I will make use of them, but I have not done so yet, or at least not enough to give you my thoughts.

HLS and MPEG-DASH are very close to one another : they are nearly the same.

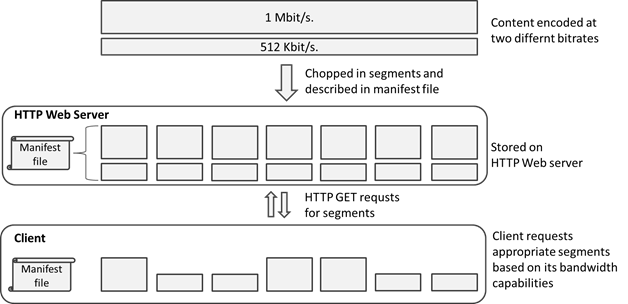

The stream publisher still publishes content in real time to a server, often still using RTMP, but that server now cuts the content into several chunk files, generates a manifest, and publishes the manifest to the clients.

The clients ask for and read the manifest, then download each chunk it references, one after the other and in the correct order, and so end up reading the stream content.
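As a rough illustration of the client side, here is a minimal sketch that reads an HLS media playlist and lists the chunks in the order they would be downloaded. The playlist content and the chunk names are made up for the example, and a real player does much more (reloading the playlist, handling encryption, and so on).

```python
# Hypothetical manifest, as a server could publish it for a live stream.
PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:6.0,
chunk120.ts
#EXTINF:6.0,
chunk121.ts
#EXTINF:6.0,
chunk122.ts
"""


def segment_uris(playlist_text):
    """Return the segment URIs of an HLS media playlist, in playback order."""
    uris = []
    for line in playlist_text.splitlines():
        line = line.strip()
        # Lines starting with '#' are tags or comments; the others are URIs.
        if line and not line.startswith("#"):
            uris.append(line)
    return uris


for uri in segment_uris(PLAYLIST):
    print("would download", uri)
```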

The advantage lies in the technologies involved : clients just need a web browser as everything goes through HTTP, although the browser must support the video and audio decoding algorithms (codecs).

Also, the server can generate several streams with different qualities and resolutions, and the client can ask for one or another after measuring the bandwidth between itself and the server. It is adaptive : clients can switch from one stream quality to another as the available bandwidth changes over time.
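The selection logic can be sketched in a few lines. This is only an assumption about how a simple player could decide, not the algorithm of any particular player; the `pick_variant` name, the bitrate ladder and the 80% safety margin are all hypothetical.

```python
def pick_variant(variants_kbps, measured_kbps, safety=0.8):
    """Pick the highest bitrate that fits within a safety margin of the bandwidth."""
    budget = measured_kbps * safety
    fitting = [v for v in variants_kbps if v <= budget]
    # Fall back to the lowest quality when nothing fits.
    return max(fitting) if fitting else min(variants_kbps)


ladder = [1500, 4000, 6000]  # hypothetical renditions, in kbps
for bandwidth in (10000, 6000, 1000):
    print(bandwidth, "kbps measured ->", pick_variant(ladder, bandwidth), "kbps stream")
```

The client would run this again every time it re-measures its bandwidth, and switch renditions at the next chunk boundary.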

So this is nice for mobile devices but less suited to non-mobile ones, such as a PC or a laptop, which can afford to fire up an RTMP client and read the stream directly. Mobile devices tend to have less reliable network connections, and thus need adaptive streams like HLS. A fixed PC does not : it knows the bandwidth it has access to, and can therefore choose the content quality it wants by itself.

And cutting/splitting, and possibly recompressing, the stream has one drawback : it is not live anymore. You pay a big delay penalty. The delay between the time the publisher sends its content to the server and the time the client can read it varies from 10 to 30 seconds. Not really real-time streaming anymore, right ?
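A back-of-the-envelope sketch of where that delay comes from. It assumes the player starts three chunk durations behind the live edge, which is what the HLS specification (RFC 8216) advises for live playlists; the function name and the chunk durations are only examples, and real setups add encoding and transfer time on top.

```python
def hls_startup_delay(target_duration_s, segments_behind_live=3, processing_s=0.0):
    """Approximate live delay: buffered chunks plus any extra processing time."""
    return target_duration_s * segments_behind_live + processing_s


for duration in (4, 6, 10):
    print(f"{duration}s chunks -> about {hls_startup_delay(duration):.0f}s behind live")
```

Shorter chunks reduce the delay, at the price of more HTTP requests and a less efficient encode.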

I did experiment with HLS, but only a little. I'll update the content here, or write another blog post about my experience with HLS and DASH.

Other technologies ?

That's only an introduction. I don't know or master all the stack, all the details, etc... but I provide here enough info for the curious souls to fetch more about the topics of interest. This article, for example ?

So many of them describe the world of streaming audio/video content in real time !

Let's start by the end

My need is to live stream video game content (pretty much like on Twitch), or more generally to live stream my computer screen to some clients/friends/family etc... At least in 1440p, and why not downscaled to 1080p or lower to suit slower/weaker clients.

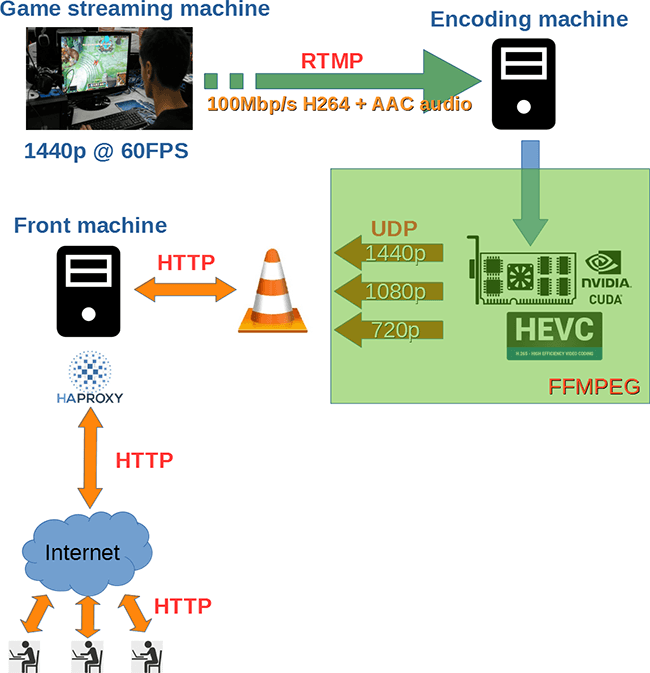

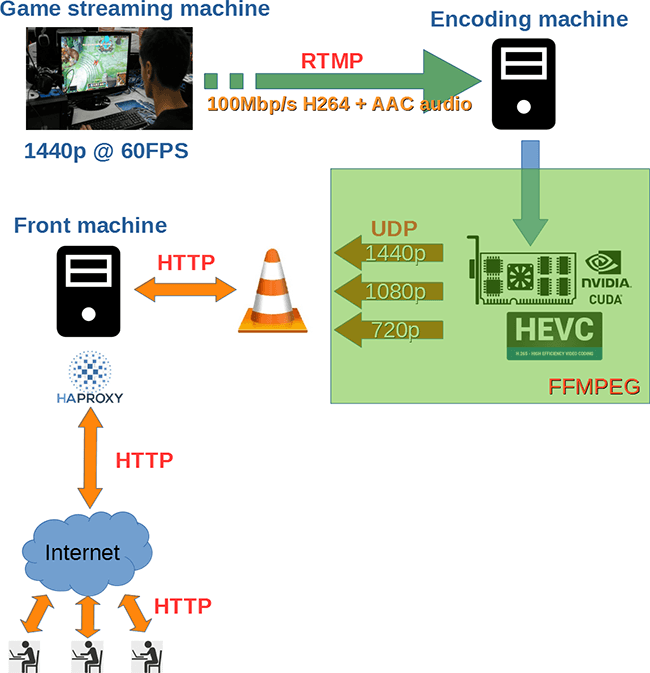

Here is what I ended up with, and it works :

The stack is not very different from a stream provider's (it's just scaled down to a tiny stack), but everything is self-hosted and done by me, mainly using open source software.

As you can see, I barely use RTMP. Why ?

Because RTMP can only carry FLV, and that pretty old format is not compatible with the latest video compression technologies, namely H265/HEVC, VP9 or AV1.

I will publish a future article about how to use RTMP to stream content. That is easy : it basically requires capture software such as OBS and an RTMP server like Nginx (with its RTMP module), and you are done. "Copying" the basics of a streaming provider's stack is easy today. I managed to do it in a few hours. Or one full day if you want to push the concepts.

But going further, with better technologies and stacks, is not that easy.

Video compression technologies

First, recall that a video format is not the same as a container format.

If you read those pretty well designed Wikipedia articles I just linked you, you get the picture.

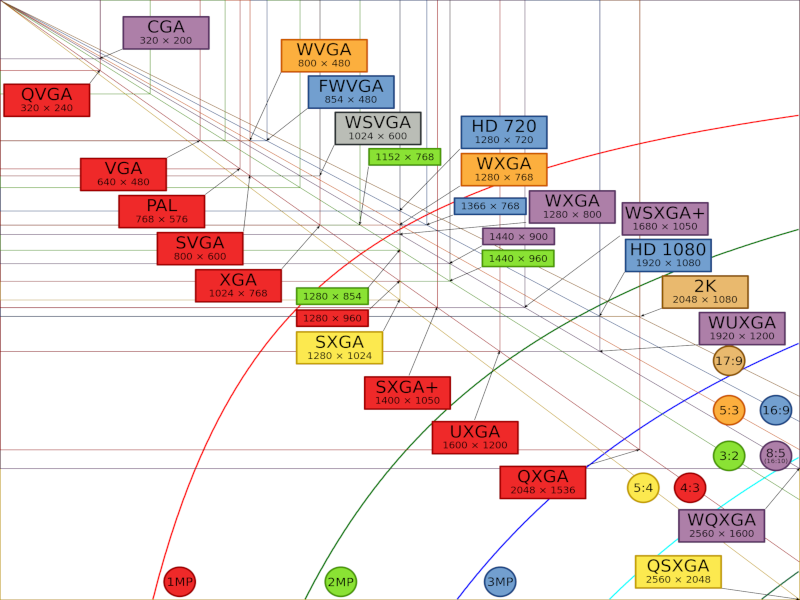

Also, you should know about video/screens resolutions.

Look at those two wonderful pictures that sum things up just nice !

So as of today 2019, we can assume that 1080p is a standard, every TV, PC, laptop or even tablet or phones (huh !) can display 1080p.

Above 1080p, we find 1440p, also called WQHD, that is the intermediate successor of 1080p, before the 4K resolution. Many devices today (especially PC screens) display 1440p or even more.

If you bought a recent TV, it is 4K. If you bought a recent PC or laptop, it is at least 1440p, or 4K. Recent == <= 2y.

The 4K era is here, now, flowing technology reaching us...

1080p is starting (just starting) to become ... a has-been. Or to become the standard for low resolution devices (basically small form factor devices, like phones).

Keep those resolution charts in mind for further reading, they are important.

A quick article about screen resolutions.

H261/MPEG-1

History. I still remember MPEG-1. The first time I did encode my own video, Windows 95 (or 98 ?), oh yeah !

Used before the year 2000, basically for very small resolutions, like VGA, on CRT screens. Its basic technical concepts and algorithms are the root of today's video encoders. They are still used, but pushed way further.

H262/MPEG-2

History. MPEG-2 was used for DVD encoding, basically from 1995 to 2005. DVDs are MPEG-2, nothing else. It is a good algorithm, as long as the resolution doesn't go over 720p. DVDs are roughly half that resolution (720x480) and still use ~10Mbps for the video stream bitrate, which is high (to give my own perceptual scale).

MPEG-4 and the DivX/Xvid era

MPEG-4 is the MPEG standard family that most of today's video codecs build on (some audio codecs as well).

MPEG is hard, hard to understand : it is a jungle. Good luck not getting lost while exploring it. MPEG evolves : MPEG-4 is ~20 years old, but keeps on moving. Get some info.

DivX codec appeared in 1999. Its open source friend : Xvid, in 2001. I remember studying its source code and even contributing a little bit to it when I was a student.

DivX was known - back in that era - for being able to compress a 4.7GB DVD (MPEG-2) onto a single 700MB CD-ROM (using MPEG-4) with acceptable visual quality.

Those codecs are still usable today, but only to encode low resolutions (< 720p).

Back around the year 2000, XGA was used, then 720p.

Better codecs have been developed since :

MPEG-4 H264/AVC

H264 (also called AVC) is old. It was standardized in 2003 and spread through the market from ~2005, designed to support the high resolutions of that era. Back then, 1080p (also called "full HD") was not on the consumer market yet, but was just about to reach it.

H264 is efficient for 720p to 1080p, but from 1080p (included) upwards (1440p, 4K or 8K), H264 is not the solution anymore, as better codecs have been developed since.

Today, more and more screens, TVs or video playback systems support resolutions at or above 1080p. Screens are still getting bigger and bigger, and thus need higher and higher resolutions.

Video games, for example. I have been playing video games a lot since 1988, and still do. I play on PC and I've been using resolutions above 1080p for years now (mainly 1440p). Video game consoles are always a few steps behind PCs : they have only recently allowed you to play in 1080p, and they don't support higher resolutions yet. But the next generation will (~2020).

H264 Pros :

- Supported everywhere (as of 2019)

- Not free, but very cheap licensing, which can be free of charge in many cases.

- Cheap encoding and decoding resources. Any processor from 2005 onwards can encode or decode H264 in real time with no problem. SSE4.2 is still recommended.

- Can be embedded very cheaply into dedicated hardware SoCs (TVs, phones and cameras embed such circuitry today)

H264 cons :

- Not efficient from 1080p upwards : it produces very large files or bitrates to keep a good visual quality. 1080p needs at least a 10Mbps bitrate to prevent artifacts and color degradation.

- Thus H264 is starting to be an outdated technology.

H264 has been implemented as open source : libx264.

H264 is used on Blu-ray discs below 4K. That's why a 2h Blu-ray film - 1080p or 1440p - weighs 25GB to 40GB. Crazy.

MPEG-H H265/HEVC

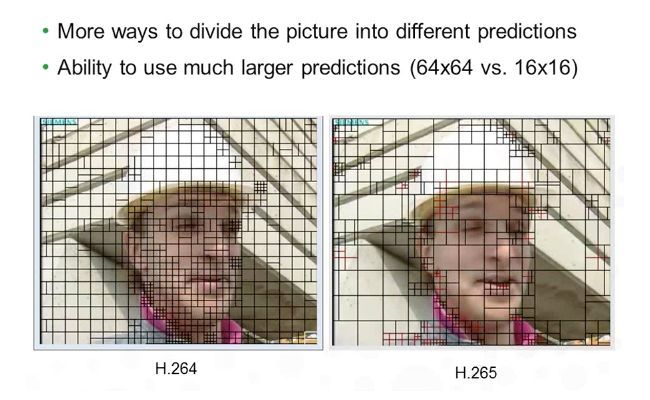

H265 (also called HEVC) is based on the H264 algorithms, but pushes them further, mainly to provide High Efficiency Video Coding for high, very high and HUGE resolutions.

The more the resolution increases, the more efficient H265 will be compared to H264.

At 1080p, H265 is at least 30% more efficient than H264. At 1440p, H265 is about 40% more efficient than H264, and for 4K you can expect about 50%. It kicks H264 away, really.

H265 blows away H264. That's incredible.

And one picture can explain why (assuming you got some video coding knowledge) :

H265 is recent, it is meant to be used today and tomorrow. It was introduced to the market in ~2015, 10 years after H264, and was especially designed to support resolutions >= 1080p.

H265 however comes with some drawbacks. Let's see that :

H265 pros :

- A compression algorithm that is way better than H264. You can expect files to be 30 to 50% smaller for the same visual quality - even more than that if you approach 4K. That is : up to a factor of ~2. For streaming, that means you'll need 30 to 50% less bandwidth to push your content to your clients, compared to H264. Huge.

- Suited for very high resolutions. 4K or 8K will use H265, H264 is a no-go at this level. Even 1440p - the resolution between 1080p and 4k - benefits a lot from H265 compared to H264.

H265 cons :

- Its licensing is both complex and way more expensive and less permissive than H264's. This subject is evolving. Learn more about H265 licensing here.

- The computing power needed to decode H265 is about 20% higher than for H264, and this gap grows as the resolution grows.

- The computing power needed to encode H265 is about 30% higher than for H264, and this gap grows as the resolution grows.

- Not much embedded circuitry exists today, because of the complexity of the underlying algorithms and thus the cost of implementing them in hardware. Embedded SoCs are however appearing more and more as of end 2019.

- Fewer devices support H265 than H264 (TVs, cameras, etc...) mainly because H264 is a 15-year-old (mastered) technology and the H265 licensing terms are not welcoming.

H265 has been implemented as open source : libx265.

H265 is slowly coming to devices. I bought a new TV set last year (2018), and I took great care to buy one that supports H265 natively. Not many models did. Buying a TV set today (2019) that supports H264 but not H265 is a mistake - at least in my opinion.

H265 is used on Blu-ray discs when they reach 4K. Some 1440p Blu-rays can be found encoded in H265 as well. Those Blu-ray players embed H265 decoding circuitry.

If you want to decode H265 in real time, you'll need a CPU with AVX support at a minimum, AVX2 being strongly suggested. The higher the resolution, the higher the CPU cost.

If you want to encode H265 in real time, you'll need a CPU with AVX2/FMA at a minimum for 1080p and above, or even better : a GPU (more on that later).

Obviously if you need to encode at low resolutions, 720p or even lower, you will need way less computing power. Remember that the fewer pixels you have to process, the faster it will be. You could even use H264 for low resolution content : H264 encoding and decoding is much lighter on hardware than H265.

Others, like VP9 or AV1 (non-MPEG)

I don't have much experience with VP9. VP9 is a competitor of H265 that claims to be "free". It can achieve better compression than H265. I did test it... Ouch.

It has no GPU encoding support yet (only decoding), and as for the CPU... WOW.

I just could not handle the load with my hardware. Real-time VP9 encoding requires server-grade processors, such as Xeon, and I only own consumer processors : Intel Core.

Perhaps with the latest generation of Intel Core (9th, as of end 2019) you could achieve such a task ? I'm not even sure. Ping me if you have some info.

So, VP9 is shiny. I did encode with it (not in real time), and I do see a ~15% improvement in size/bitrate compared to HEVC, but it takes ages to encode a video segment in software (on traditional 2018/2019-era CPU hardware). That's a no-go for real time streaming (once more, in my case).

AV1 is shiny, and so promising. I'm looking forward to it ! It should be available - as stable - soon. You may already try it (unstable, but well advanced). Read more about it : it could be the next leading codec, taking over H264's comfortable seat.

MPEG-5 EVC

This is to be the future of H265, by MPEG.

Infos from January 2019 can be found here.

Want some numbers ?

I did some experiments, but I don't share the files because I don't know about the rights to them.

Judging video quality is mainly a matter of human perception. You should do your own tests with your own eyes and acceptance conditions, but ...

A 1440p average-scene video (with movement) of 1 min at 60FPS (with no audio data) weighs :

- 14.8GB as uncompressed RAW YUV video.

- 107MB in MPEG1 at 15Mbps CBR

- 87MB in MPEG2 at 10Mbps VBR

- 36MB in H264 at 5Mbps VBR

- 25MB in H265 at 3.5Mbps VBR

- 34MB in VP9 at 3.5Mbps VBR

All, for the same visual quality. Impressive isn't it ?
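As a sanity check on the list above, file size follows directly from bitrate and duration. The sketch below reads the sizes as megabytes, since that is what bitrate x 60 seconds / 8 lands on; the helper name is hypothetical, and VBR results are expected to drift from the nominal bitrate.

```python
def stream_size_mb(bitrate_mbps, seconds):
    """Size in megabytes of a stream at a given average bitrate (megabits/s)."""
    return bitrate_mbps * seconds / 8


# codec: (target bitrate in Mbps, measured size in MB), taken from the list above
measured = {
    "MPEG1": (15, 107),
    "MPEG2": (10, 87),
    "H264": (5, 36),
    "H265": (3.5, 25),
    "VP9": (3.5, 34),
}

for codec, (mbps, size_mb) in measured.items():
    expected = stream_size_mb(mbps, 60)
    print(f"{codec}: nominal {expected:.1f} MB, measured {size_mb} MB")

saving = 1 - measured["H265"][1] / measured["H264"][1]
print(f"H265 file is {saving:.0%} smaller than the H264 one")
```

On this sample the H265 file comes out roughly 30% smaller than the H264 one, which is in line with the efficiency range quoted earlier.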

I did not go far in the codec settings. Medium preset for everybody.

I don't know why on this video, VP9 produces worse results than H265. To Be Investigated and pushed (codec settings).

My live streaming implementation

So, back to my needs : I have limited upload bandwidth, and I want to stream high resolution content in real time (1440p currently, higher in the future, perhaps 4K ?).

I simply could not go with H264 (too high a bitrate to keep a correct quality), nor with VP9 (I don't have the hardware to encode it in real time).

I chose H265.

The problem with H265 is that it is NOT compatible with RTMP/FLV. Adobe never patched RTMP to support it. Some unofficial support may exist (I did not find any, ping me if you have a clue about H265 in RTMP), so I needed to find alternatives.

That's why as of today, you can't stream to Twitch using H265. I don't know about others, but as you can't pack H265 video streams into RTMP...

As of today, H265 can not be carried by RTMP.

Think about the containers also. To my knowledge, H265 can only be packed into MPEG-TS if you want to stream it (here again, if you have more info, please share).

Other problem : to encode H265 in real time at 1440p, you'll need a GPU. It can be done with a CPU, but you will need a very advanced one from the professional server range. Maybe 9th-gen Intel CPUs are strong enough ? I doubt it.

So let's go for GPU encoding.

But if the GPU is used to encode the stream, it cannot be used to play the video game at the same time... ? Not unless one owns the latest Nvidia RTX/Titan on the market (not my case), and even that is not certain (any info ?).

So I dedicated the encoding process to another machine, with an Nvidia GPU.

I don't use AMD hardware. Intel for CPU, Nvidia for GPU.

The publisher : my playing machine

Playing a PC game at 1440p and above 60FPS needs good hardware, and it obviously needs that hardware to be dedicated to gaming !

If the hardware must handle both the game and the encoding of the stream, we run into a problem. Real-time H265 encoding at high resolution while playing ... seems hard (unless you put $3K on the table for the latest RTX or Titan from Nvidia).

Unix thinking here again : "one device/program for just one task, but do it right and efficiently".

My playing machine would just need to push the raw video frames and audio to an encoding machine. Raw means uncompressed frames read directly from the GPU's output rendering buffers.

The software I use is OBS. Pretty well known software built on top of the excellent FFMPEG.

My gaming machine runs Windows with Intel CPU and Nvidia GPU that allows me to enjoy gaming sessions in 1440p at 60FPS minimum (90FPS average).

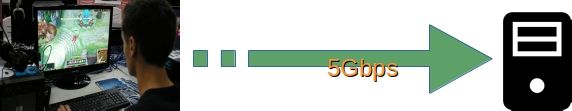

However, do you know how much bandwidth you need to carry 1440p frames and the sound, in uncompressed format, to some encoding machine ?

The answer is : about 5Gbps. For 4K, it reaches 7 to 8Gbps.
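Those orders of magnitude can be checked with a quick calculation. The pixel format is not stated here, so the bits-per-pixel values below are assumptions : 24 for RGB or YUV 4:4:4, 16 for YUV 4:2:2, 12 for YUV 4:2:0. The function name is hypothetical, and audio is ignored as it is negligible next to video.

```python
def raw_video_gbps(width, height, fps, bits_per_pixel):
    """Bandwidth of uncompressed video, in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


resolutions = {"1440p": (2560, 1440), "4K": (3840, 2160)}
for name, (w, h) in resolutions.items():
    for bpp in (12, 16, 24):
        print(f"{name} at 60FPS, {bpp} bits/pixel : {raw_video_gbps(w, h, 60, bpp):.2f} Gbps")
```

With 24 bits per pixel, 1440p at 60FPS lands a little above 5Gbps; with 16 bits per pixel, 4K at 60FPS lands just under 8Gbps. Either way, it is far beyond a 1Gbps link.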

And my home network is limited to 1Gbps. We got a problem here. This problem has two solutions :

- Upgrade the network to 10Gbps to allow the playing machine to send in real time uncompressed 1440p frames and sound (~5Gbps needed) to the encoding machine.

- Compress the stream with a light compression algorithm to reduce its bandwidth to the network capacity : 1Gbps here.

For now, I chose solution 2; solution 1 will be implemented in the future (and I will probably update this blog post).

So the playing PC cannot send a raw stream to the video processing machine :-(

I need to compress to go under 1Gbps.

If I still want to play with no resource problems, I need a fast compression algorithm. I chose ... H264, yes !

My gaming machine compresses the stream in H264 using the GPU hardware encoder.

With a recent GPU, you can enjoy playing in 1440p at >= 60FPS while encoding the frames in real time with the GPU H264 encoder. But for H265, you would need a very strong and very costly GPU. Do not count on your CPU for such tasks (unless you run a very strong Xeon ?).

I chose an H264 output of ~100Mbps video bitrate. At such a rate, the image quality is perfect. The encoding stack will receive a perfect quality (but still compressed) video stream that it will then reprocess-recompress and/or downscale.

Remember that the network capacity between the playing machine and the encoding machine is 1Gbps (local LAN).

A word about sound, the audio stream : audio represents about 1% of the load (even <1%), video being the other 99%. So I won't say much about audio. Audio compression is efficient and really light : any CPU can compress any sound to any format in real time with ease (70x real time with my current CPU). It is negligible, and thus I don't bother detailing it.

The sound is compressed by my gaming machine using classic AAC, nothing special, which should eat about ... 1% of my CPU (busy running a heavy game) ?

The gaming machine uses RTMP to send the streams to the video processing machine. The muxer used is FLV.

I push frames to the encoding machine using RTMP, just like I could push them directly to Twitch. But not as 100Mbps H264, right ? Twitch limits you to 8Mbps, and with 8Mbps of H264, you barely get something acceptable for 1080p... I stream in 1440p, which would require about 13 to 15Mbps using H264 (for good average visual quality) !

The encoding machine

Haha. Not a monster, no no no. It is equipped with a pretty old CPU, but ... also a GPU.

Real-time H265 encoding is not that big a deal if you use a GPU. If you use a general purpose CPU however, you'll need a monster.

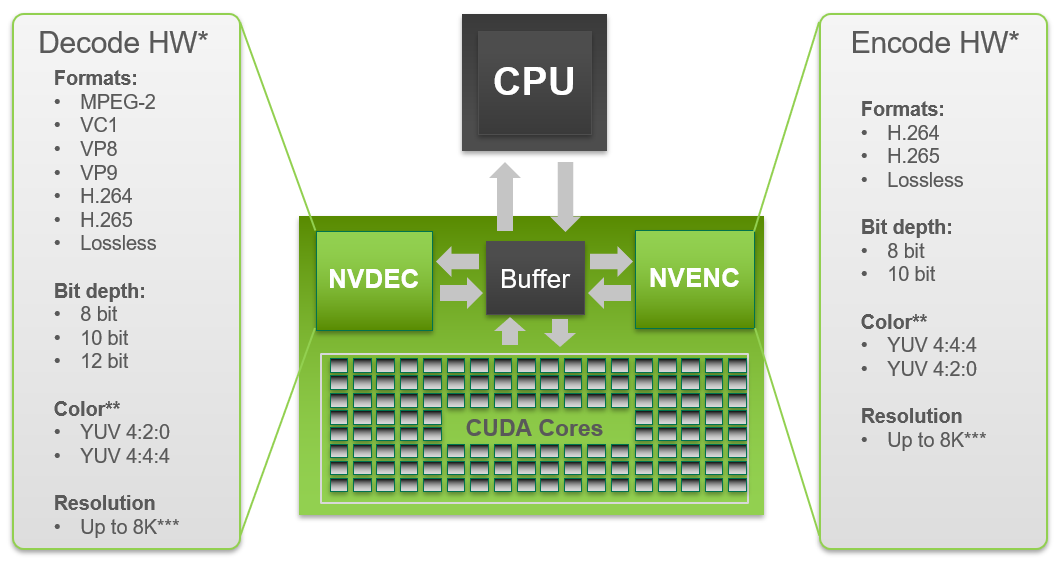

I encode using an old graphics card of mine : an Nvidia GTX 970.

It is an old model from ~2014. But GPUs are so much more efficient for this kind of task (video compression) that this GTX 970 actually produces 2 parallel H265 streams in real time, yes it does !

Amazing.

- 1 full resolution 1440p with a chosen bitrate of 6Mbps.

- 1 HD 1080p with a chosen bitrate of 4Mbps.

- 1 future SD 720p ?

- 1 future LD 320p ?

The Nvidia GTX 970 is so old that it is not even in the NVENC Support Matrix any more. This matrix is important, look at it if you plan to encode using a GPU. You can see that some models have more parallel units than others. It is a selection criterion I always look at when I change my graphics card.

I remember the Nvidia GTX 970 being equipped with 2 HEVC NVENC units, hence 2 stream qualities produced in real time.

I encode using the fast preset, but that's more than enough for my needs.

A preset is a set of options with predefined values. The "fast" preset favors low resource usage over quality in the video coding trade-off.

So the encoder is named NVENC and it changed my way of encoding videos. NVENC has already been on the market for ~5 years.

Nothing to compare with CPU, it just blows all CPUs away, really. It is amazingly fast, that's crazy compared to a recent CPU.

I've been encoding videos - not in real time but mainly movies - since 1998.

To encode H265 with an Nvidia GPU, you'll use hevc_nvenc. Here are its options. Do not confuse it with x265, the open source H265 encoder that runs on a general purpose CPU. x265 does not provide the same options as hevc_nvenc.

GPUs are not only good at cracking passwords or mining bitcoins ;-)

The processing software I used is FFMPEG and VLC. Both are the swiss-army knives of video/audio processing. I'm sure you know about them : they are both well known open source programs.

You don't need anything but FFMPEG and VLC to process, consume, stream, split, join, resize, combine ... video and audio streams. Ah, you need the hardware as well ;-).

FFMPEG serves as an RTMP server, receives the real-time live stream (audio and video) from my gaming PC, passes it to the NVENC units, and pushes the output to VLC. Audio is not processed in any way, but copied from input to output as-is.

Here is the command line used :

    # Listen for RTMP, decode H264 on the GPU, encode two HEVC outputs (1440p and 1080p)
    ffmpeg -listen 1 -re -c:v h264_cuvid -vsync 0 -i rtmp://192.168.0.4 \
      -max_muxing_queue_size 9999 \
      -c:a copy -c:v hevc_nvenc -vf hwupload_cuda \
      -preset fast -rc vbr -b:v 6M -maxrate 6M -bufsize 1M -g 60 -sc_threshold 0 \
      -f mpegts "udp://127.0.0.1:11440?pkt_size=1316" \
      -c:a copy -c:v hevc_nvenc -vf scale=1920:1080,hwupload_cuda -preset fast \
      -rc vbr -b:v 4M -maxrate 4M -bufsize 1M -g 60 -sc_threshold 0 \
      -f mpegts "udp://127.0.0.1:11080?pkt_size=1316"

This command acts as an RTMP server, listening for a connection from the gaming machine that wants to stream some content. The content is encoded in H264 if you followed this blog post in order, so I use the Nvidia hardware H264 decoder (h264_cuvid) to decode the video stream on the GPU. This operation is light : it takes about 5% of the GPU at most.

Then, I encode using NVENC HEVC, the H265 encoder from Nvidia. I use the fast preset : that's enough, and since the GPU is pretty old, let's not ask too much of it. The audio input is joined to it untouched.

I mux each of the two encoded streams using MPEG-TS, and send them to ports on the local machine using raw UDP. A VLC instance listens on the other end.
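A note on the pkt_size=1316 value in the command : MPEG-TS packets are always 188 bytes long, and 1316 is exactly 7 of them, the largest whole number that fits in one UDP datagram on a standard Ethernet link. The post does not say why the value was picked, so take this as the usual rationale rather than a statement of intent; the 1500-byte MTU below is an assumption (the loopback interface used here typically allows far more).

```python
TS_PACKET_SIZE = 188   # fixed size of one MPEG-TS packet, in bytes
ETHERNET_MTU = 1500    # assumption: standard Ethernet MTU
IPV4_HEADER = 20       # IPv4 header without options
UDP_HEADER = 8

max_payload = ETHERNET_MTU - IPV4_HEADER - UDP_HEADER
packets_per_datagram = max_payload // TS_PACKET_SIZE
pkt_size = packets_per_datagram * TS_PACKET_SIZE

print(f"{packets_per_datagram} TS packets per datagram, pkt_size={pkt_size}")
```

Keeping each datagram aligned on whole TS packets means a lost datagram never leaves the receiver with half a packet.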

What is VLC doing here ?

Well, remember how streaming works ?

I want people to come to me, act as clients, and ask for a stream content. That content should then be pushed to them.

This is what RTMP does out of the box. But remember ? RTMP is not compatible with H265. So, what can replace it ?

I did not find many answers to that question.

RTSP is one.

Do not confuse RTMP and RTSP : they are really not the same, even if the final goal is !

But RTSP has a drawback. It works by pushing the stream to the client using RTP over UDP. That means it usually can't pass firewalls or NATs.

The exact same problem that SIP faced. So, as I don't want to bother with STUN and other NAT traversal techniques, I pushed my thinking further.

We can use HTTP. I was not aware of that until I studied VLC documentation and source code.

But nothing prevents HTTP from carrying .... well, anything, you know.

Once the client has initiated the connection, HTTP can push anything, and VLC implements an HTTP server to push multiplexed content, mainly using the MPEG-TS multiplexer.

Nice, here is the command :

cvlc -v udp://@127.0.0.1:11440 --sout "#standard{access=http,mux=ts,dst=192.168.0.4:11440/1440}"

cvlc -v udp://@127.0.0.1:11080 --sout "#standard{access=http,mux=ts,dst=192.168.0.4:11080/1080}"

So VLC gets the output from the FFMPEG encoding process. This output is internally demuxed, then re-muxed by VLC still in MPEG-TS, with one video stream in H265 together with the audio stream. VLC then creates an HTTP server, waits for clients to connect, and as a response pushes the entire compressed stream bytes. And guess what ? That just works, like a charm.

Here is the dump decoded as ASCII. Simple.

    GET /1080 HTTP/1.1
    Host: the-jpauli-stream
    Accept: */*
    Accept-Language: fr
    User-Agent: VLC/3.0.8 LibVLC/3.0.8
    Range: bytes=0-

    HTTP/1.0 200 OK
    Content-type: application/octet-stream
    Cache-Control: no-cache

    G@d.........
    1.Y./..Y./....@......@.............{. ....B...@.............
    {..........%.............<`..Q...H....@....D..........F.P....@
    ..@.............{. ....B...@.............{.......G.d....%....
    ************** video data ****************

The only problem of HTTP is that it's not been designed to transport real-time video content. That means that it has no way of dealing with flow interruptions or network latency / jitter.

You can see that the video content is answered directly after the request. There is no packet chunking, this is HTTP.
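To illustrate how simple the response is, here is a small sketch that splits such a response into headers and body, and checks that the body starts with an MPEG-TS packet (every TS packet begins with the sync byte 0x47, the "G" that opens the binary part of the dump). The capture below is rebuilt by hand with a fake packet; the function name is hypothetical.

```python
TS_SYNC_BYTE = 0x47  # ASCII "G", first byte of every 188-byte MPEG-TS packet


def split_http_response(raw):
    """Split raw response bytes into (status line, headers dict, body bytes)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.decode("iso-8859-1").split("\r\n")
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return lines[0], headers, body


# Hypothetical capture: the headers from the dump, followed by one fake TS packet.
fake_packet = bytes([TS_SYNC_BYTE]) + b"@d" + bytes(185)
raw = (
    b"HTTP/1.0 200 OK\r\n"
    b"Content-type: application/octet-stream\r\n"
    b"Cache-Control: no-cache\r\n"
    b"\r\n"
) + fake_packet

status, headers, body = split_http_response(raw)
print(status)
print("content-length present :", "content-length" in headers)
print("body starts with a TS sync byte :", body[0] == TS_SYNC_BYTE)
```

There is no Content-Length and no chunked encoding : the server simply keeps writing TS packets on the connection until one side closes it.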

But HTTP sits on top of TCP, and TCP will chunk and recover for us. The problem is that TCP is too heavy for such content. That's why it would be better published over the lighter and more appropriate RTP, sitting on top of UDP and adding out-of-order correction, jitter correction, and light ACK mechanisms through the use of RTCP.

You should just study a simple dump of RTP, and you'll understand how much better it fits such a need.

But still, the goal is accomplished because :

- I don't care about your mobile phone, that's not my audience here.

- I don't care about your poor jittering Internet connection, we are in 2019, that should not happen.

- I don't care if you use wifi to read my stream, instead of much more reliable cables.

- In conclusion, the network path - end to end - between my streaming server and my clients should be free of any failure. Failures cause TCP retransmissions, and thus pause the video until TCP resumes the correct flow. A large client-side buffer can absorb many failures.

Reminder : one client needs 6Mbps of download bandwidth to read the full 1440p resolution stream, or 4Mbps for the downscaled 1080p version.

The total measured delay between an action on the source machine and this action being seen by a receiver on the Internet is about 3 seconds, assuming a European viewer with good enough bandwidth and a clear network path. Not bad : that stays within the limits of "real time", in my opinion at least.

The routing and front machine

OK, the last piece is just about routing those streams to the outside Internet.

As usual, and for many years now, I use HAProxy as an L7-L4 router.

The goal is to have URLs that are user friendly, like this :

Public URLs, the real ones, are not delivered here.

Etc.. Each URL being connected to the VLC HTTP server of the correct stream.

That's a few lines of HAProxy configuration :

    defaults
        mode http
        option splice-auto

    frontend front
        bind *:80
        bind ipv6@:80
        acl stream_dom base_dom the-jpauli-stream.tld
        acl stream_1440 path_dir /1440
        acl stream_1080 path_dir /1080
        acl stream_720 path_dir /720
        use_backend stream_1440 if stream_dom stream_1440
        use_backend stream_1080 if stream_dom stream_1080
        use_backend stream_720 if stream_dom stream_720
        default_backend none

    backend stream_1440
        server srv 192.168.0.4:11440

    backend stream_1080
        server srv 192.168.0.4:11080

    backend stream_720
        server srv 192.168.0.4:1720

    backend none

Done. Notice the port mappings :-)

So. I currently use 6Mbps and 4Mbps streams. The total upload bandwidth from my ISP is between 300 and 400Mbps. With full 1440p streams, I can serve 300/6 = 50 clients with no bandwidth problem. With the 1080p stream : 75 clients.

Not that bad is it ?
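The same arithmetic as a small sketch, which also covers a mixed audience. It takes the low end of the upload range (300Mbps) and ignores protocol overhead, so treat the results as upper bounds; both function names are hypothetical.

```python
def max_clients(upload_mbps, stream_mbps):
    """How many simultaneous viewers of one stream the uplink can feed."""
    return int(upload_mbps // stream_mbps)


def upload_needed_mbps(viewers_1440p, viewers_1080p, mbps_1440p=6, mbps_1080p=4):
    """Upload bandwidth used by a mixed audience of both streams."""
    return viewers_1440p * mbps_1440p + viewers_1080p * mbps_1080p


print(max_clients(300, 6), "viewers at 1440p")
print(max_clients(300, 4), "viewers at 1080p")
print(upload_needed_mbps(30, 30), "Mbps for 30 viewers of each stream")
```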

Yes, fiber based Internet connection is needed. I do benefit from it. Very stable. ISP is Orange.

As I don't expect that number of viewers, I did not implement resource limiting. I could do that at two levels :

- Directly into the input router

- Into HAProxy

I use Mikrotik as a router. I don't have the answer off the top of my head, but I don't foresee any difficulty doing that in RouterOS. It would however be wrong : acting on the network to solve a service resource problem is not the right answer.

For HAProxy, it is not hard. We could answer 503 or 429 with a Retry-After header in such an overload case ?
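The decision itself is tiny. The sketch below is not HAProxy configuration, only the admission logic one would want the proxy to apply; the function name, the limit and the retry delay are hypothetical.

```python
def admit(active_clients, max_clients, retry_after_s=30):
    """Return (status code, extra headers) for a new viewer request."""
    if active_clients >= max_clients:
        # Overloaded: refuse politely and tell the client when to come back.
        return 503, {"Retry-After": str(retry_after_s)}
    return 200, {}


print(admit(10, 50))
print(admit(50, 50))
```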

Conclusions

Once more, the Internet is an open medium. Why would everyone put their eggs in the same basket, using middleware platforms that restrict their freedom and can watch and do what they want with the content you send ?

I don't want my stream to be tagged with ads. I don't want my stream to fall if the middleware falls. I don't want the middleware to intercept my stream, record it, or even change its content... (what prevents them from doing so ? I send my content to them, so ... ?).

I want to stream whatever I want using just my stack and Internet connection.

OK, DIY also has drawbacks, and is not the magic answer to every question but... I've been an Internet pioneer (well, some kind of ?) and am a computer scientist. I tend to build stuff myself - the way I want - and not the way someone else imposes on me.

Also, the Internet was not designed to be as asymmetric as it is today. That creates tons of problems, because it creates very unbalanced flows of data, whereas the Internet was designed with balance in mind : everyone should be able to publish everything, to everyone.

I don't use the Internet to give my data to someone and ask them to share it with others. I can do it myself, I don't need you.

That does not mean I don't stream through external providers. I use Twitch, as I mentioned. All it means is that I have my own streaming stack, and I may use someone else's stack if I want to : I simply forward to them while streaming with my own output. I'm not closed-minded, just showing you that all the technologies involved are open and accessible, not the property of one or two companies.

Use open source, keep your mind open, and fight against every little thing/soul that tries to convince you of the opposite ;-) Do it for the Internet.